The sci-fi trope of future humans working alongside robots, before the machines eventually turn on their creators, is as old as the genre itself.

However, for Jamia McQueller, majoring in computer science isn't about being able to build her own R2-D2 to accompany her, or to develop whatever the next smart device is. Instead, it provides a means to something more important: inclusivity.

“I really got into technology to represent those who have been historically excluded,” McQueller said. “Not having enough representation in any field is scary, but it is especially harmful in technology because we are now creating digital spaces that may or may not be inclusive.

“I want to be involved in helping to create inclusive spaces around race, gender, and sexual orientation. And in tech, I want to say: We belong just as much and our presence here is also purposeful.”

To that end, the junior cybersecurity major from Port Huron has enrolled in the University of Michigan-Flint Undergraduate Research Opportunities Program (UROP), which is designed to support collaboration between undergraduate students and faculty researchers.

UROP allows students to gain paid or volunteer practical research experience by working alongside faculty on cutting-edge projects. In addition, faculty members are given the opportunity to mentor motivated and talented students. Due to the diverse nature of the research conducted at UM-Flint, undergraduates at any level and from any academic discipline can participate in UROP.

McQueller, who is expected to graduate in 2025, is using her UROP experience to learn how computer science tools can improve the ways humans interact with robots and in virtual spaces. “I registered for UROP because I aspire to gain more experience and exposure, which will be beneficial in my job search after graduation,” she said.

Teamwork with robots

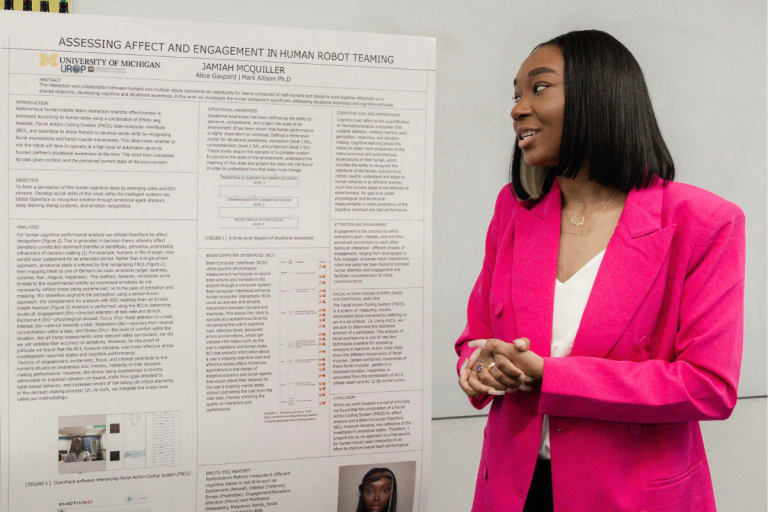

Earlier this year, McQueller assisted Mark Allison, associate professor of computer science, who is part of a larger National Science Foundation-funded research project looking at teamwork and robotics.

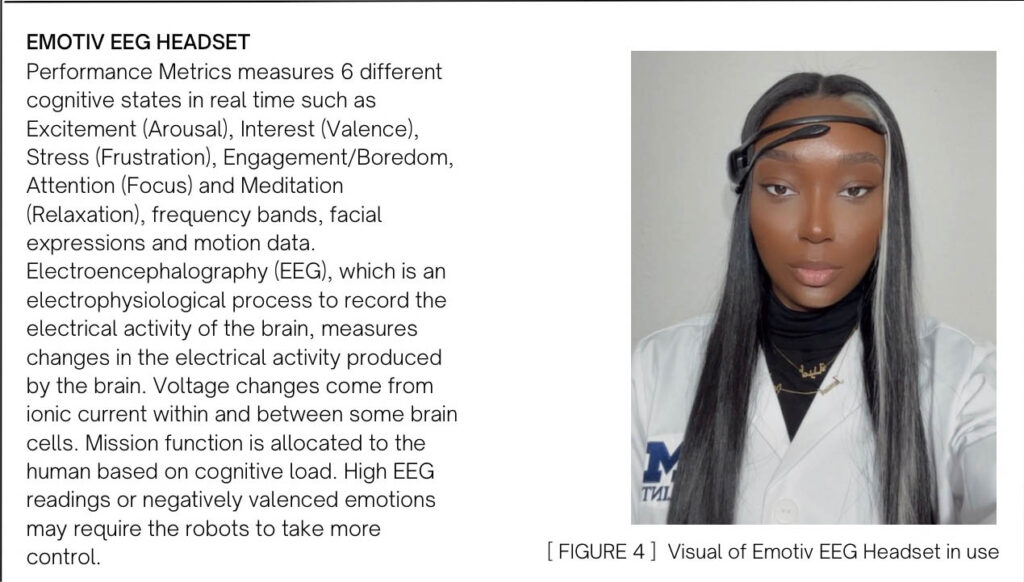

For her part, McQueller helped analyze software that would enable a robot to detect what a person might be feeling using a range of modern data collection tools, including an Emotiv EEG headset, the Facial Action Coding System (FACS), a brain-computer interface (BCI), and OpenFace.

Some of these tools fall into the realm of autoencoders, a specific type of artificial neural network. From a machine learning perspective, autoencoders are designed to learn efficient encodings of large batches of data and represent that data in useful ways by training the network to ignore the “noise” in the signal. An easier way to think about it: these tools can collect a great deal of specialized data about a person, but only certain parts of that data are useful for understanding how the person is feeling. Our brains do this every day. If we didn't ignore large chunks of the data we receive through our senses, our heads would explode.
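For readers who want to see the idea in code, here is a minimal sketch of an autoencoder that learns to ignore noise. It is a toy illustration only, not the software used in Allison's project: the data is synthetic (eight made-up "channels" driven by two hidden factors), the network is a plain linear autoencoder trained with gradient descent in NumPy, and the names `make_toy_data` and `train_linear_autoencoder` are hypothetical.

```python
import numpy as np


def make_toy_data(n_samples=200, noise_std=0.3, seed=0):
    """Synthetic stand-in for sensor readings (an assumption, not real EEG data)."""
    rng = np.random.default_rng(seed)
    # Two orthogonal patterns spread two hidden factors across 8 channels.
    mixing = np.array([
        [1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0],
        [1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 1.0, -1.0],
    ])
    hidden = rng.normal(size=(n_samples, 2))
    clean = hidden @ mixing
    noisy = clean + noise_std * rng.normal(size=clean.shape)
    return clean, noisy


def train_linear_autoencoder(x, code_size=2, epochs=2000, lr=0.01, seed=1):
    """Fit encoder and decoder weights by minimizing reconstruction error."""
    rng = np.random.default_rng(seed)
    n_features = x.shape[1]
    w_enc = rng.normal(scale=0.1, size=(n_features, code_size))
    w_dec = rng.normal(scale=0.1, size=(code_size, n_features))
    for _ in range(epochs):
        code = x @ w_enc                # compress 8 channels into a 2-number code
        error = code @ w_dec - x        # how far the reconstruction is from the input
        grad_dec = code.T @ error / len(x)
        grad_enc = x.T @ (error @ w_dec.T) / len(x)
        w_enc -= lr * grad_enc
        w_dec -= lr * grad_dec
    return w_enc, w_dec


if __name__ == "__main__":
    clean, noisy = make_toy_data()
    w_enc, w_dec = train_linear_autoencoder(noisy)
    reconstructed = noisy @ w_enc @ w_dec
    print("error of noisy input:   ", np.mean((noisy - clean) ** 2))
    print("error of reconstruction:", np.mean((reconstructed - clean) ** 2))
```

Because the network has to squeeze eight channels through a two-number code, it can only keep the patterns that explain most of the data, and much of the random noise is dropped along the way. Real emotion-sensing pipelines would use far richer data and nonlinear networks.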

“Autoencoders find a way for humans and machines to work together in a healthy way,” McQueller said. “This is an important piece of the puzzle.” McQueller also helped analyze how these tools could help robots adapt to fluctuations in the mood and stress of the humans around them.

Some may know the work of renowned researcher Paul Ekman, who postulated that some basic human emotions are innate and common to all, and that they are accompanied across cultures by universal facial expressions. With this in mind, McQueller used FACS and the OpenFace emotion recognition software to distinguish between the six basic emotions of anger, sadness, surprise, fear, disgust, and happiness, since, according to the researchers, they “manifest in the same way in our facial muscles” and do so “very reliably.” However, differences in emotional baselines or culture can skew these results.

After analysis, the team found that a combination of FACS and Emotiv tools, which pull human emotional data, and a BCI, which filters that data into a usable form, can actually reflect emotional states, a step toward improving team performance between robots and humans. Robots currently on the market have to move very slowly when humans are present, which is unacceptable for today's manufacturers. This project seeks to remove that bottleneck so that robots can have a kind of situational awareness and adapt to fluctuations in the mood and ability of the humans around them.

We humans do this by nature. To learn how to program this capability into autoencoders, Allison's team had to gain a deep understanding of how it actually works in humans. “This experience has provided me with a new perspective on emotions,” McQueller said. “Learning about these principles gives you an opportunity to more effectively assess someone's emotional state and cooperate with them.”

Virtual reality and heart models

McQueller currently works as a research assistant in the Computational Medicine and Biomechanics Laboratory at UM-Flint, under the supervision of Yasser Aboulgasim, assistant professor of digital manufacturing technology. Her role in the Aboulgasim lab is to develop “patient-specific” virtual reality software that can be used to improve the way cardiovascular disease is visualized, analyzed, and diagnosed.

Through this new project, the team hopes to integrate McQueller's software tool with virtual reality technology to visualize and analyze 3D cardiac medical images. The process involves taking the commonly seen images produced from standard MRI data and converting them into 3D models. McQueller then takes those models and inserts them into the virtual surgery center she creates.

“Ultimately, our goal is to enable doctors to detect abnormalities earlier in patients, thus improving medical outcomes,” Aboulgasim said.

The resulting cardiac VR platform will be patient-specific and is expected to help during the diagnostic stages for patients suffering from cardiovascular diseases. Currently, a radiologist or surgeon must visualize the structure of the aorta by looking at individual layers and slices of the whole. This technology will enable them to view a comprehensive picture of the aorta, see where the damage is, and adjust their treatment plan with greater confidence.

Taking it a step further, the team is combining real-time data with 4D MRI images, which helps them analyze blood flow within the arterial network.

For McQueller, UM-Flint isn't just a place to learn; it's a place to make a difference for herself and others. “This university is a stepping stone to a promising future,” she said. “Through my research at UROP and at CIT, I aspire to make a tangible impact by enabling doctors’ offices to quickly detect vascular damage and thus save many lives.”